A little bit of background:

The FreeNAS box is built on a pair of Hitachi 1 TB, 7200 rpm, SATA 3 Gbps drives in a ZFS mirror. The network cards are the onboard Realteks for iSCSI, and a 32-bit PCI Realtek for management.

The ESXi server has two Intel PCI-X network cards dedicated to iSCSI.

Both machines are connected to an HP ProCurve switch, with a VLAN dedicated to iSCSI traffic.

The VM used for testing is a W2k8r2 machine that has a data volume VMDK located on the FreeNAS.

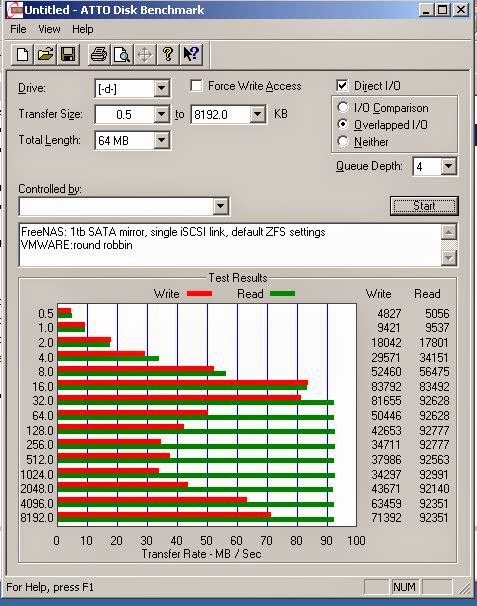

First test: Single iSCSI link on the FreeNAS, VMware pathing is set to MRU.

Just a chart showing the data transfer to both drives in the RAID set.

A chart showing the data transfer across the NICs. The one circled in red is the one dedicated to iSCSI traffic.

Second test: Single iSCSI link on the FreeNAS; VMware pathing set to Round Robin. Notice it is WAY slower than MRU.

Third test: Dual iSCSI links on the FreeNAS; VMware pathing set to Round Robin. The results were not much different than MRU.

This chart shows that both NICs are doing SOME data transfer.
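One possible reason both NICs show only some traffic: a round-robin policy typically sends a batch of I/Os down one path before rotating to the next, so at any instant only one link is busy. Here is a toy model of that behavior. It is not VMware's implementation; the path names are made up, and the 1000-I/O batch size is my understanding of the ESXi default, so treat it as an assumption.

```python
def round_robin_paths(io_count, paths, ios_per_switch=1000):
    """Toy model: send ios_per_switch I/Os down one path, then rotate.

    Returns how many I/Os each path carried. The path names and the
    batch size are illustrative assumptions, not measured values.
    """
    counts = {p: 0 for p in paths}
    for i in range(io_count):
        # Integer-divide by the batch size to find which batch this I/O
        # belongs to, then wrap around the list of paths.
        path = paths[(i // ios_per_switch) % len(paths)]
        counts[path] += 1
    return counts


if __name__ == "__main__":
    # Hypothetical path names for the two iSCSI NICs.
    print(round_robin_paths(2500, ["nic_a", "nic_b"]))
```

In this model both paths end up carrying traffic over time, but a single stream never uses both at once, which would fit with the dual-link result not being much faster.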

Observations, questions, theories??!?!

I may have hit the ceiling on the transfer rate of the RAID1 SATA drives. It is possible that they simply cannot go any faster, and adding a 2nd NIC into the mix just isn't going to help. Also, since all the load is coming from a single VM talking to a single iSCSI server, there isn't enough hitting the NAS to take advantage of MPIO. The Realtek NICs are, well, junk (that is even noted in some of the FreeNAS documentation). Would an enterprise NIC be any better? Ideally I would have two separate NICs taking on the workload instead of a "dual port NIC".
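To put rough numbers on the "ceiling" theory, here is a back-of-the-envelope sketch. It assumes the iSCSI NICs are gigabit (not stated above) and uses a placeholder for the drives' sustained rate, which I have not measured. The only hard figures are the line rates: 1 Gbps works out to 125 MB/s raw, and SATA 3 Gbps with its 8b/10b encoding to 300 MB/s.

```python
def link_ceiling_mb_s(gbit_per_s, encoding_efficiency=1.0):
    """Raw ceiling of a serial link in MB/s (decimal megabytes).

    encoding_efficiency covers line coding overhead, e.g. 0.8 for the
    8b/10b encoding used by SATA. Protocol overhead (TCP, iSCSI) is
    ignored, so real throughput will be lower.
    """
    return gbit_per_s * 1000 / 8 * encoding_efficiency


def path_ceiling_mb_s(nic_count, nic_gbit, disk_mb_s):
    """The slower of the network side and the disk side sets the ceiling."""
    network = nic_count * link_ceiling_mb_s(nic_gbit)
    return min(network, disk_mb_s)


if __name__ == "__main__":
    # ASSUMPTION: placeholder sustained disk rate, not a measured value.
    assumed_disk_mb_s = 100.0
    print("1 GbE link:", link_ceiling_mb_s(1.0), "MB/s")
    print("SATA 3 Gbps:", link_ceiling_mb_s(3.0, 0.8), "MB/s")
    for nics in (1, 2):
        print(nics, "NIC(s):", path_ceiling_mb_s(nics, 1.0, assumed_disk_mb_s), "MB/s")
```

If the drives top out below what one gigabit link can carry, the second link cannot raise the ceiling, which is the first theory above. Swap in a measured local disk rate to check it.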

I like your graphs!
