Time to build a new FreeNAS/TrueNAS server to be my backup target system.

The system to be used is a Lenovo TS140 workstation-class machine. It has an Intel Xeon E3-1226 v3 CPU, which will probably be swapped for an Intel Core i3-4160T, as that chip has roughly half the TDP. The original 8GB of DDR3 RAM was replaced with 16GB of unbuffered ECC RAM. An Intel i520 10Gb NIC was installed, along with a PCIe adapter that holds an NVMe drive and an M.2 SATA SSD.

The boot drive is a very old Intel 64GB 2.5" SSD; a 512GB M.2 SATA drive and a 512GB NVMe drive were added. Interestingly, the system does not seem to like 3rd-generation NVMe drives; several were tried. The NVMe drive that ended up being used is an older Samsung SM953, which seems to have no issues with older systems. Some used HGST 10TB 7200rpm hard drives were acquired and initially set up as a mirror.

The Lenovo has a total of five SATA ports, so unless the M.2 SATA SSD is removed, running four 10TB SATA drives is not an option. For clarification: SATA0 goes to the Intel 64GB boot drive, SATA1 goes to the M.2 SATA SSD, and SATA2 through SATA5 feed the spinning hard disks.

With two drives installed, the system draws roughly 45 watts at idle. Initial testing was not very scientific. The test client was a Windows VM hosted on a VMware server, running on NVMe storage and connected over a 10Gb network. A folder containing two 5GB ISO images was copied. The minimum, maximum, and average speeds were observed, and the copy was timed with a stopwatch. Yes, I admit it: there is lots of room for error and subjectivity. The NAS was rebooted after each test to make sure the ZFS read cache (ARC) was empty.

Later a third drive was added and the pool was rebuilt as RAIDZ1, which is basically the same thing as RAID5. Note that one cannot easily convert a pool from one layout to another. The existing pool had to be destroyed and then recreated with the three drives. Power draw in this configuration jumped to 53 watts.
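At the ZFS level, each layout in this post is just a different set of vdev arguments to `zpool create`. This includes the two-mirror "RAID 10" layout discussed for the four-drive build. The sketch below only builds and prints the argument lists; it does not run anything. The pool name `backup` and the device names `ada1` to `ada4` are hypothetical. TrueNAS normally does all of this from its web UI. Rebuilding a mirror as RAIDZ1 would also first require `zpool destroy`, which erases the pool.

```python
"""Build (but do not run) `zpool create` argument lists for common layouts.

Assumptions: pool and device names are hypothetical; nothing is executed.
"""
from typing import List


def zpool_create_argv(pool: str, layout: str, disks: List[str]) -> List[str]:
    """Return the argv list for `zpool create` with the requested vdev layout."""
    vdevs: List[str] = []
    if layout == "mirror":
        # A single mirror vdev: every disk holds a full copy of the data.
        if len(disks) < 2:
            raise ValueError("a mirror needs at least 2 disks")
        vdevs = ["mirror", *disks]
    elif layout in ("raidz1", "raidz2"):
        # A single RAIDZ vdev with one (raidz1) or two (raidz2) disks of parity.
        parity = int(layout[-1])
        if len(disks) <= parity:
            raise ValueError(f"{layout} needs more than {parity} disks")
        vdevs = [layout, *disks]
    elif layout == "striped-mirrors":
        # "RAID 10, kind of": consecutive pairs become mirror vdevs, and ZFS
        # stripes writes across all top-level vdevs in the pool.
        if len(disks) < 4 or len(disks) % 2:
            raise ValueError("striped mirrors need an even number of disks, at least 4")
        for i in range(0, len(disks), 2):
            vdevs += ["mirror", disks[i], disks[i + 1]]
    else:
        raise ValueError(f"unknown layout: {layout}")
    return ["zpool", "create", pool, *vdevs]


if __name__ == "__main__":
    # Hypothetical device names for the three-drive RAIDZ1 rebuild.
    print(" ".join(zpool_create_argv("backup", "raidz1", ["ada1", "ada2", "ada3"])))
    # Hypothetical four-drive "RAID 10" layout: one pool, two mirror vdevs.
    print(" ".join(zpool_create_argv("backup", "striped-mirrors",
                                     ["ada1", "ada2", "ada3", "ada4"])))
```

The second line prints `zpool create backup mirror ada1 ada2 mirror ada3 ada4`. That is one pool containing two mirror vdevs, with ZFS striping data across them.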

| Configuration (Lenovo TS140) | Max | Min | Copy time |
|---|---|---|---|
| Mirrored SATA | 330 MB/s | 185 MB/s | n/a |
| NVMe | 420 MB/s | 350 MB/s | 45 s |
| M.2 SATA SSD | 360 MB/s | 300 MB/s | 49 s |
| RAIDZ1 SATA | 400 MB/s | 290 MB/s | 65 s |

Another system? An opportunity to acquire a Dell PowerEdge R320 presented itself. Its advantages over the Lenovo are four 3.5" hot-swap trays, remote management via Dell's iDRAC, dual power supplies, and more RAM. It came with 48GB of DDR3 ECC as three 16GB sticks, with three more slots open, for a total of six slots vs. four on the Lenovo. The CPU is an Intel Xeon E5-2640 v2. Lower-power versions of compatible CPUs might be worth acquiring later on.

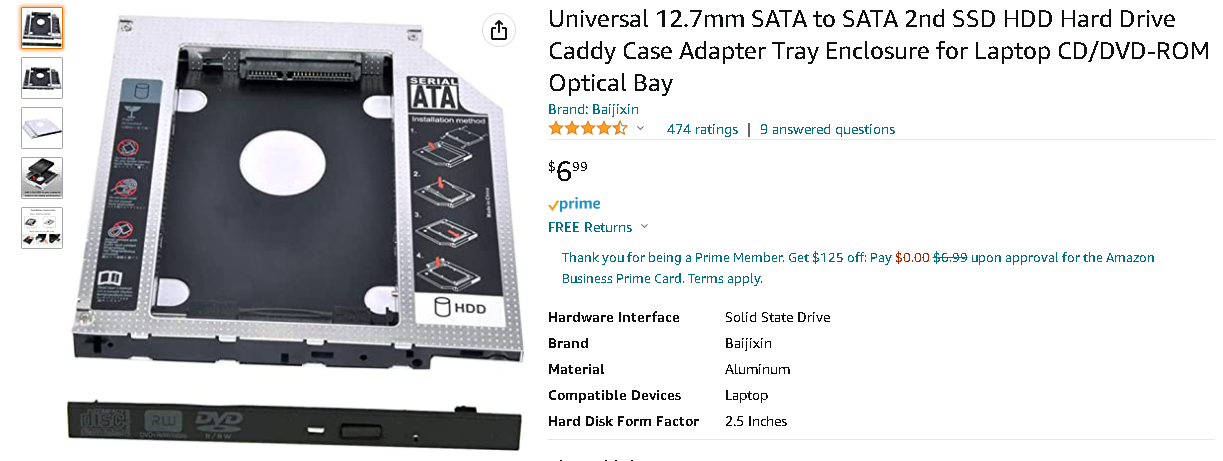

The PowerEdge has one SATA port, plus the SFF-8087 (mini-SAS) connector that feeds the four bays on the disk backplane. The single SATA port goes to a notebook optical-drive-bay to 2.5" hard drive adapter, which houses another Intel 64GB SSD for booting. However, this means there are no unused SATA ports left for the M.2 SATA SSD.

With two of the same 10TB SATA drives, it consumes 70 watts according to iDRAC (not measured with the Kill-A-Watt). With four drives it jumped to 84 watts. According to the Kill-A-Watt, the four-drive configuration plus the NVMe drive consumes 100 watts at idle. FWIW, with the system off but plugged in, the machine consumes 16 watts.
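The idle readings make it easy to estimate two things: what each extra spinning drive costs in power, and what the box uses over a year. This is a minimal sketch, assuming the machine idles around the clock at the measured draw. The Lenovo figures (45 W and 53 W) come from earlier in this post. The 70 W and 84 W figures are iDRAC readings, while the 100 W and 16 W figures are Kill-A-Watt readings, so the two meters may not agree exactly.

```python
"""Per-drive idle power and yearly energy, using the readings in this post.

Assumption: the machine idles around the clock at the measured draw.
"""

HOURS_PER_YEAR = 24 * 365  # 8760 hours, ignoring leap years


def watts_per_added_drive(before_w: float, after_w: float, drives_added: int) -> float:
    """Average extra idle draw for each drive added."""
    return (after_w - before_w) / drives_added


def yearly_kwh(idle_w: float) -> float:
    """Energy in kWh for one year at a constant draw of idle_w watts."""
    return idle_w * HOURS_PER_YEAR / 1000


if __name__ == "__main__":
    print(f"Lenovo TS140, 2 -> 3 drives: {watts_per_added_drive(45, 53, 1):.1f} W per drive")
    print(f"Dell R320, 2 -> 4 drives (iDRAC): {watts_per_added_drive(70, 84, 2):.1f} W per drive")
    print(f"Dell R320 at 100 W idle (Kill-A-Watt): {yearly_kwh(100):.0f} kWh/year")
    print(f"Dell R320 off but plugged in, 16 W: {yearly_kwh(16):.0f} kWh/year")
```

With these inputs, the Lenovo's third drive works out to 8 W, and the R320 to 7 W per added drive. Running at 100 W around the clock comes to 876 kWh a year.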

This system came with a PERC H310 Mini Monolithic RAID card. These cards support hard drives in "non-RAID" mode. It is not entirely clear how that differs from a normal drive, but a drive configured that way is directly readable in a normal PC and vice versa. A bit of research shows that SMART data from the drives is not passed through to the OS.

People have tried to flash these cards into "IT mode" (turning them into plain SAS/SATA HBAs), and most of those same people end up bricking the card! Art-Of-The-Server has figured out how to re-flash them properly, and also sells them pre-flashed on eBay for roughly $50.

The motherboard has the same SAS connector as the H310. The PERC was removed from the system, and the SAS/SATA cable was moved from the PERC card to the motherboard. Windows Server 2019 was installed and the I/O was benchmarked; the results were pretty close to those through the PERC. So, for now, there doesn't seem to be any reason to spend time attempting to re-flash the PERC into IT mode. For the time being only SATA drives will be used. One downside of using the onboard SATA controller is that iDRAC is completely unaware of the drives in the system; one cannot log into iDRAC to verify drive health.

[Image: two PERC H310 Mini Monolithic RAID cards]

When configuring four drives, there are basically two options. The first is RAIDZ2, which is similar to RAID6: it keeps two drives' worth of parity, so with four drives 50% of the capacity is usable and any two drives can fail. The other option is RAID 10, kind of: one creates a single pool that contains two mirrors. The first two drives are mirrored, the 3rd and 4th drives are a mirror, and ZFS stripes across the two mirror groups. Again 50% of the capacity is usable and two drives can possibly fail. However, if both drives in the same mirror fail, the whole pool is lost.
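The capacity and failure trade-off can be checked by brute force, because four drives allow only six possible two-drive failures. This is a sketch that assumes raw capacity only (no ZFS overhead, and TB rather than TiB). It also assumes the pairing described above, with drives 1+2 and 3+4 forming the two mirrors (numbered 0+1 and 2+3 in the code). RAIDZ1 is included for comparison.

```python
"""Usable capacity and two-drive-failure survival for four 10 TB drives.

Assumptions: raw capacity only (ignores ZFS metadata, padding, and TB vs TiB),
and the striped mirrors pair drives 0+1 and 2+3.
"""
from itertools import combinations

DRIVE_TB = 10
DRIVES = 4

# Usable capacity per layout, before any ZFS overhead.
USABLE_TB = {
    "raidz1": (DRIVES - 1) * DRIVE_TB,            # one drive's worth of parity
    "raidz2": (DRIVES - 2) * DRIVE_TB,            # two drives' worth of parity
    "striped-mirrors": (DRIVES // 2) * DRIVE_TB,  # each mirror stores two copies
}


def survives(layout: str, failed: set) -> bool:
    """Return True if the pool keeps its data after the drives in `failed` die."""
    if layout == "raidz1":
        return len(failed) <= 1
    if layout == "raidz2":
        return len(failed) <= 2
    if layout == "striped-mirrors":
        # Losing both halves of either mirror destroys the whole pool.
        return not ({0, 1} <= failed or {2, 3} <= failed)
    raise ValueError(f"unknown layout: {layout}")


if __name__ == "__main__":
    pairs = list(combinations(range(DRIVES), 2))  # the 6 possible two-drive failures
    for layout, tb in USABLE_TB.items():
        ok = sum(survives(layout, set(pair)) for pair in pairs)
        print(f"{layout:<16} {tb} TB usable, survives {ok}/{len(pairs)} two-drive failures")
```

The results are 30, 20, and 20 TB usable for RAIDZ1, RAIDZ2, and striped mirrors. RAIDZ2 survives all six two-drive failures. The striped mirrors survive 4 of the 6, because only losing both halves of the same mirror is fatal.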

| Configuration (Dell R320) | Avg | Min |
|---|---|---|
| Mirrored SATA | 200 MB/s | 185 MB/s |
| RAIDZ1 SATA | 300 MB/s | 220 MB/s |
| RAIDZ2 SATA | 300 MB/s | 220 MB/s |
| Dual mirrors SATA | 350 MB/s | 250 MB/s |

[Screenshot: Windows VM during mirrored test]

[Screenshot: Windows VM during M.2 SSD test]

[Screenshot: Windows VM during RAIDZ2 test]

[Screenshot: Windows VM during NVMe test]

[Screenshot: network card reporting during RAIDZ1 test]

[Screenshot: network card reporting during NVMe test]

The Lenovo and the Dell R320 cannot truly be compared in terms of speed. The systems were tested in different environments, with different switching infrastructure, different test VMs, etc. However, the numbers do give a rough idea. Going forward, iSCSI targets will be set up, and a VM will be placed on one to run an actual I/O test. Also on the docket is testing various forms of cache. The primary purpose of this server is to be a backup target, and MAYBE shared storage for VMware, so an L2ARC (read cache) does me no good. It might be advantageous to put the ZFS intent log on faster storage as a separate log (SLOG) vdev. However, using a 512GB NVMe drive for that is way overkill. With a 10Gb NIC it will never use more than about 20GB, and the entire device must be allocated to the log vdev.
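The "never more than about 20GB" figure can be sanity-checked with a little arithmetic. A SLOG only comes into play for synchronous writes. This rough sketch rests on three assumptions. First, incoming writes are capped by the 10Gb NIC. Second, OpenZFS uses its default transaction group timeout (`zfs_txg_timeout`) of 5 seconds. Third, the log keeps a rule-of-thumb of about three transaction groups' worth of space. The function name is hypothetical, not a TrueNAS or ZFS API.

```python
"""Rough SLOG (separate ZFS intent log) sizing for a given network link.

Assumptions: writes arrive no faster than the NIC line rate, the OpenZFS
default zfs_txg_timeout of 5 seconds, and room for about three transaction
groups. The function name is hypothetical, not a TrueNAS or ZFS API.
"""


def slog_size_bytes(link_gbit_s: float, txg_timeout_s: float = 5.0, txgs: int = 3) -> float:
    """Bytes of log space needed to absorb `txgs` transaction groups at line rate."""
    bytes_per_s = link_gbit_s * 1e9 / 8  # 10 Gb/s is 1.25e9 bytes/s
    return bytes_per_s * txg_timeout_s * txgs


if __name__ == "__main__":
    need = slog_size_bytes(10)
    print(f"~{need / 1e9:.2f} GB needed; a 512 GB NVMe drive would be "
          f"{need / 512e9:.1%} used")
```

Under these assumptions it comes out to about 18.75 GB, in line with the 20GB estimate. That is a small fraction of a 512GB device.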