***Work in progress: I will continue to update this until the project is finished.***

The Dell PowerEdge R930 is a 4U, four-CPU system. These are some notes from working on them. Information on them was sparse, so hopefully someone else can benefit from my research. The biggest help was this source from Dell, along with this link.

When using four CPUs, the minimum RAM configuration is eight memory modules: one stick of RAM in the #1 slot of each of the eight memory riser cards.

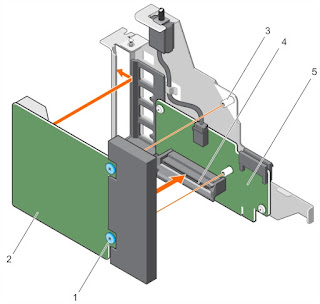

It doesn't matter much which hard drive controller is used, but it MUST be in the very first slot. Remove the I/O shield, put the card into slot one, and use the lever to secure it. In this case it is a single PERC H730.

The network card has the same "form factor" as the one found in the R630/R730: it uses the proprietary connector. A special riser card takes this NIC and converts it to PCIe. That riser also has a dedicated slot for installing the SD memory card module.

With ONE HBA/RAID card in a 24-drive system, the SAS cable from port A on the card goes to port A on the SAS expander, and likewise for port B. The top SAS port on the backplane goes to the furthest port on the SAS expander, and the bottom SAS port on the backplane goes to the closest port on the SAS expander. If you use the wrong cables or plug them into the wrong ports, the system will complain.
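If it helps to keep track while wiring a chassis, here is a minimal, purely illustrative sketch in Python that writes the connections above as a lookup table, with a hypothetical `check_cabling` helper for comparing against what you actually plugged in. The port labels ("top", "furthest", etc.) are just the descriptions used in these notes, not Dell's official port names, and the sketch only checks where each cable goes, not whether it is the correct cable part.

```python
# Hypothetical checklist for ONE HBA/RAID card in a 24-drive R930.
# Port labels are the descriptions from these notes, not Dell part names.
EXPECTED_CABLING = {
    ("HBA/RAID", "A"): ("SAS expander", "A"),
    ("HBA/RAID", "B"): ("SAS expander", "B"),
    ("backplane", "top"): ("SAS expander", "furthest"),
    ("backplane", "bottom"): ("SAS expander", "closest"),
}


def check_cabling(actual):
    """Compare recorded cabling against EXPECTED_CABLING.

    `actual` maps (device, port) -> (device, port).
    Returns a list of problem descriptions; an empty list means it matches.
    Only checks where cables go, not which cable model is used.
    """
    problems = []
    for source, expected_dest in EXPECTED_CABLING.items():
        dest = actual.get(source)
        if dest is None:
            problems.append(f"{source[0]} port {source[1]}: not connected")
        elif dest != expected_dest:
            problems.append(
                f"{source[0]} port {source[1]}: goes to {dest[0]} port {dest[1]}, "
                f"expected {expected_dest[0]} port {expected_dest[1]}"
            )
    return problems


if __name__ == "__main__":
    # Example: the top and bottom backplane cables are swapped.
    recorded = {
        ("HBA/RAID", "A"): ("SAS expander", "A"),
        ("HBA/RAID", "B"): ("SAS expander", "B"),
        ("backplane", "top"): ("SAS expander", "closest"),
        ("backplane", "bottom"): ("SAS expander", "furthest"),
    }
    for problem in check_cabling(recorded):
        print(problem)
```

Running it as-is reports the two swapped backplane cables; fill in `recorded` with your own wiring to use it as a checklist.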

Parts needed:

- backplane
- SAS expander
- backplane SAS cables
- SAS cables from HBA/RAID to SAS expander
- front bezel