It turns out that when one orders a 0.5 m cable, it might not actually be half a meter. In this case I had two cables, one from Mellanox and the other from FS.com. Obviously someone isn't right here. Apparently one vendor includes the length of the transceivers in the overall length, while the other measures just the cable. Anyway, buyer beware, as it can make a difference.

Ramblings of an IT Professional (Aaron's Computer Services) Aaron Jongbloedt

Cable Length is relative....

vCenter Backup/Restore and "Upstream Server not found"

I learned some things about Virtual Center 7.x this weekend. Here are a few key takeaways that will hopefully help someone else out there:

Restoring Virtual Center using Veeam

Suppose one needs to restore a vCenter using Veeam, and Veeam was set up the way most environments are: with the vCenter added to Veeam, rather than each ESXi host added individually. In that case Veeam cannot restore the vCenter the way one would normally do a VM restore. During the restore process Veeam NEEDS to communicate with the now non-existent vCenter, so the restore cannot happen and the process wastes a great deal of time.

The workaround is to first add a single ESXi host to Veeam directly, using its IP address (the friendly name is presumably already known to Veeam through vCenter). The point is to trick Veeam into thinking this is a new ESXi server. Then restore the vCenter backup to an alternate location, a.k.a. this "new" server. One isn't really restoring the VM to an alternate location; one can restore right over the top of the existing VM. This is just a necessary step to get around Veeam's requirement of communicating with a vCenter that is currently not functioning.

Restoring Virtual Center using built-in restore options.

For those of you who are using vCenter's built-in backup feature, the restore process works as follows. Start the vCenter installation process, choose "Restore", give it the backup repository details, and follow the rest of the prompts. A new vCenter VM is deployed and the backup information is replayed into it. HOWEVER, the backup files and the install media need to be the very same version. I attempted to use vCenter 7.0.3-209 installation media, but my broken vCenter started life as v7.0.1x, was upgraded several times, and is currently on 7.0.3.01000. It would not restore and complained about a version mismatch. I did not bother trying to acquire previous versions of vCenter to attempt the recovery. Lesson learned: keep the install media for the version you are running, and when patching vCenter, also download the matching full ISO to install from.

vCenter Error when logging in: "No Healthy Upstream Server"

For some unknown reason my vCenter quit working, and I was presented with the "no healthy upstream" message when attempting to log in. The error message is vague and something of a catch-all, so there is no single golden fix out there, but a dozen different ones. Many people give up and just redeploy from scratch.

I could log into the appliance administration page, where I attempted to start the stopped services with no luck. Restarting the appliance didn't help either.

On my server, the "VMware vCenter Server" service (vmware-vpxd) would not start. It would churn for a good two minutes before failing.

A few useful commands to type at the appliance shell:

service-control --list  <-- list all of the running services

service-control --start --all  <-- start all services

service-control --start {service-name}  <-- start a specific service
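When hunting for which services refuse to come up, it can help to script the check. `service-control --status --all` prints service names grouped under status headings such as "Stopped:" and "Running:". The minimal Python sketch below parses that text. The exact output layout is an assumption based on typical 7.x appliances, so compare it against your own appliance first. The function names are hypothetical.

```python
import subprocess


def parse_service_status(output):
    """Group service names under the status heading they appear beneath.

    Assumed (not guaranteed) output shape:
        Stopped:
         vmcam vmware-vpxd
        Running:
         applmgmt lwsmd vmafdd
    """
    status = {}
    current = None
    for line in output.splitlines():
        stripped = line.strip()
        if not stripped:
            continue
        # Treat a single word ending in ":" (e.g. "Running:") as a heading.
        if stripped.endswith(":") and " " not in stripped:
            current = stripped[:-1]
            status.setdefault(current, [])
        elif current is not None:
            # Service names are whitespace-separated under their heading.
            status[current].extend(stripped.split())
    return status


def stopped_services(output):
    """Return the services listed under the "Stopped" heading, if any."""
    return parse_service_status(output).get("Stopped", [])


def read_status_from_appliance():
    """Run service-control on the appliance itself (only works where it is on PATH)."""
    result = subprocess.run(
        ["service-control", "--status", "--all"],
        capture_output=True, text=True, check=False,
    )
    return result.stdout
```

On the appliance, `stopped_services(read_status_from_appliance())` should return something like `["vmware-vpxd", ...]` when vpxd is down. Parsing the text keeps the sketch independent of any particular vCenter SDK.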

DNS, both forward and reverse, worked, and resolution by FQDN and by short name worked. Disk space, which dozens of others mention as the culprit, was not an issue. I did attempt to change my IP settings as recommended, but some sort of GUI bug prevented me from doing that, and changing the IP via the console did not help. NTP did not seem to be an issue either.

I also tried upgrading vCenter, thinking the upgrade would replace or repair any broken bits. This made things way worse: the upgrade failed, and I had to roll back.

I downloaded and ran a Python script from a VMware KB article to check my SSL certificates, and they passed.

The vpxd-svcs log is found here: /storage/log/vmware/vpxd-svcs/vpxd-svcs.log (the main vpxd log itself lives at /var/log/vmware/vpxd/vpxd.log).

After several hours of beating on it, I found several other pages talking about certificates and a different way to check certificate validity. This check showed mine as failed. It turns out the .py script I ran previously had actually led me astray: I did indeed have expired certificates.

for i in $(/usr/lib/vmware-vmafd/bin/vecs-cli store list); do echo STORE $i; sudo /usr/lib/vmware-vmafd/bin/vecs-cli entry list --store $i --text | egrep "Alias|Not After"; done

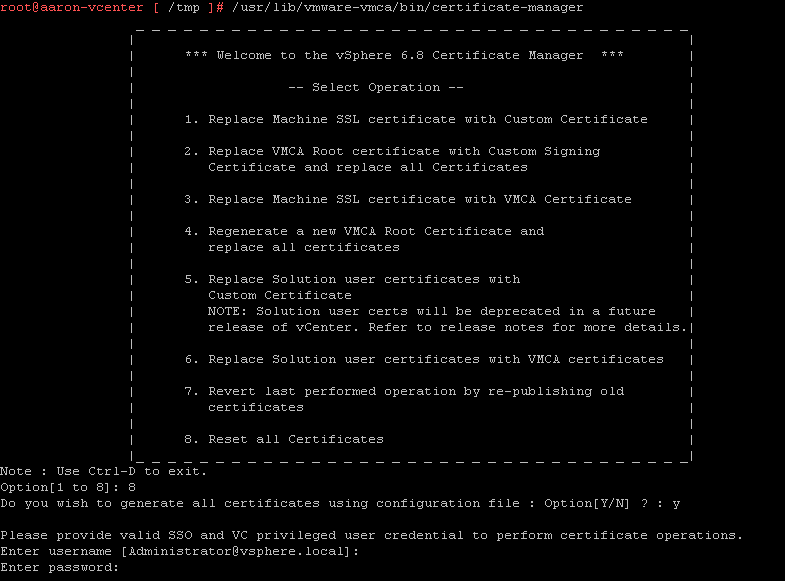
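Reading the "Not After" dates by eye across every store is tedious. The Python sketch below scans the text produced by the loop above (saved to a file, for example) and reports entries whose expiry has already passed. It assumes the `STORE name`, `Alias : ...` and OpenSSL-style `Not After : Mar  2 10:00:00 2023 GMT` line shapes seen in that output. Treat those shapes as assumptions to verify. The helper names are hypothetical.

```python
import re
from datetime import datetime, timezone

# Assumed line shapes from the vecs-cli loop (verify on your appliance):
#   STORE MACHINE_SSL_CERT
#   Alias :	__MACHINE_CERT
#               Not After : Mar  2 10:00:00 2023 GMT
ALIAS_RE = re.compile(r"^\s*Alias\s*:\s*(?P<alias>\S.*?)\s*$")
NOT_AFTER_RE = re.compile(r"^\s*Not After\s*:\s*(?P<date>.+?)\s*$")


def parse_not_after(text):
    """Turn an OpenSSL-style date like 'Mar  2 10:00:00 2023 GMT' into a UTC datetime."""
    parts = text.split()  # also collapses the double space before one-digit days
    if parts and parts[-1] == "GMT":
        parts = parts[:-1]
    naive = datetime.strptime(" ".join(parts), "%b %d %H:%M:%S %Y")
    return naive.replace(tzinfo=timezone.utc)


def find_expired(output, now=None):
    """Return (store, alias, expiry) for every entry whose Not After date has passed."""
    now = now or datetime.now(timezone.utc)
    expired = []
    store = alias = None
    for line in output.splitlines():
        if line.startswith("STORE "):
            store = line[len("STORE "):].strip()
            alias = None
            continue
        match = ALIAS_RE.match(line)
        if match:
            alias = match.group("alias")
            continue
        match = NOT_AFTER_RE.match(line)
        if match:
            expiry = parse_not_after(match.group("date"))
            if expiry < now:
                expired.append((store, alias, expiry))
    return expired
```

For example, redirect the loop's output to `certs.txt`, then call `find_expired(open("certs.txt").read())`. An empty list means nothing has expired as of now. Passing `now` explicitly makes it easy to ask what will be expired a few weeks out.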

Running the certificate tool built into vCenter to reset all the certificates fixed my issue. FYI, it does take quite a while to run.

/usr/lib/vmware-vmca/bin/certificate-manager  <-- launch the certificate utility; choose option 8 to reset all certificates

Post Recovery

VMware & NVMe drives

The VMware HCL (Hardware Compatibility List) has always been a problem for those of us on a tight IT budget, whether for a business or a home lab. It turns out the HCL's selective approval also applies to NVMe drives. I have been a fan of using PCIe-to-NVMe adapters and an NVMe gum-stick drive in older hardware for high-IOPS workloads, a caching tier such as vSAN's, or even just swap space. See some of my previous posts on this subject.

For my last home-lab upgrade, I had picked a specific NVMe drive because of its price and speed, but I couldn't get it to work. I assumed that was because it was a Generation 4 drive and simply too new for my older HP G8. Instead, I bought a second-hand, older enterprise drive that I knew from previous experience would work.

Months later, almost the exact same issue cropped up on a Dell PowerEdge R730. This time, after some Google-Fu and reading William Lam's blog post, it turned out that the drive I was attempting to use simply wasn't on the HCL, and therefore ESXi was not going to accept it.

ESXi would see the NVMe controller (listed as a "Non-Volatile memory controller"), but would not address the drive.

Running this command, one would not see the device:

esxcli storage core path list

Running this command, one would not see the device either:

esxcfg-scsidevs -l

Running this command, one could see the controller:

esxcli hardware pci list

In my case it saw the HP EX950 as:

VMkernel Name: vmhba5

Vendor Name: Silicon Motion, Inc.

Device Name: SM2262/SM2262EN SSD Controller

Configured Owner: VMkernel

Current Owner: VMkernel

Vendor ID: 0x126f

Device ID: 0x2262

SubVendor ID: 0x126f

SubDevice ID: 0x2262

Device Class: 0x0108

Device Class Name: Non-Volatile memory controller
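To pull the IDs needed for an HCL lookup out of a long `esxcli hardware pci list` dump, a small parser helps. The Python sketch below splits the output into one dict per device and keeps only those with Device Class 0x0108, the class ESXi reported above for the NVMe controller. It assumes the raw output puts an unindented address line before each device's indented `Key: Value` fields. The function names are hypothetical, not part of any VMware tool.

```python
NVME_CLASS = "0x0108"  # class ESXi shows for a "Non-Volatile memory controller"


def parse_pci_list(output):
    """Split `esxcli hardware pci list` text into one dict per device."""
    devices, current = [], {}
    for raw in output.splitlines():
        # A blank line or an unindented address line (e.g. "0000:03:00.0")
        # marks the boundary between device blocks.
        if not raw.strip() or not raw[0].isspace():
            if current:
                devices.append(current)
                current = {}
            continue
        # Split on the first colon only, so values like "0000:03:00.0" survive.
        key, sep, value = raw.strip().partition(":")
        if sep:
            current[key.strip()] = value.strip()
    if current:
        devices.append(current)
    return devices


def nvme_controllers(output):
    """Keep only devices whose Device Class matches the NVMe class."""
    return [d for d in parse_pci_list(output) if d.get("Device Class") == NVME_CLASS]


def hcl_ids(device):
    """VID/DID/SVID/SSID without the 0x prefix, as typed into the compatibility guide search."""
    keys = ("Vendor ID", "Device ID", "SubVendor ID", "SubDevice ID")
    return tuple(device.get(k, "").replace("0x", "") for k in keys)
```

For the HP EX950 above, this would yield `("126f", "2262", "126f", "2262")`. Those four values are what the VMware Compatibility Guide's IO device search asks for.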

In the end, the HP EX950 NVMe drive was pulled and a Kioxia KXG60ZNV256G, which has a Toshiba-branded controller, was installed in its place. Things worked as expected.

The forums did talk about replacing the driver with an older one from ESXi v6.7.0, but I decided to avoid all that for fear of losing access to the drive after future updates.

ALSO! It turns out ESXi does not like drives formatted to 4K! I had a drive that ESXi would see but could not provision as storage. After I deleted the namespace and recreated it with a 512-byte format, it worked just fine.
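The post doesn't say which tool was used to recreate the namespace. Assuming a Linux box with nvme-cli, `nvme id-ns -H` on the namespace lists each LBA format with its data size and marks the active one "(in use)". The hedged Python sketch below reads that text to report the block size currently in use and which format index offers 512 bytes. That index is what one would then hand to nvme-cli when reformatting or recreating the namespace. The line format shown in the comment is an assumption about nvme-cli's output, and the helper names are hypothetical.

```python
import re

# Assumed nvme-cli `id-ns -H` line shape (check against your nvme-cli version):
#   LBA Format  1 : Metadata Size: 0   bytes - Data Size: 4096 bytes - Relative Performance: 0x1 Better (in use)
LBAF_RE = re.compile(r"LBA Format\s+(\d+)\s*:.*Data Size:\s*(\d+)\s*bytes")


def lba_formats(id_ns_output):
    """Return (index, data_size_bytes, in_use) for each LBA format listed."""
    formats = []
    for line in id_ns_output.splitlines():
        match = LBAF_RE.search(line)
        if match:
            formats.append((int(match.group(1)), int(match.group(2)), "(in use)" in line))
    return formats


def in_use_block_size(id_ns_output):
    """Logical block size currently in use, or None if no format is marked."""
    for _, size, in_use in lba_formats(id_ns_output):
        if in_use:
            return size
    return None


def index_for_block_size(id_ns_output, size=512):
    """Index of the first LBA format offering the requested block size, or None."""
    for index, fmt_size, _ in lba_formats(id_ns_output):
        if fmt_size == size:
            return index
    return None
```

If `in_use_block_size(...)` returns 4096, the namespace is on a 4K format, which matches the situation described above where ESXi saw the drive but couldn't provision it.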

RAID comparisons with SSDs

Cisco Switch Reset

This is one of those things I need to do once in a while, and each time I have to look up the steps again. So these notes are mainly for my future self!

To factory reset a Cisco switch when the password is unknown:

1. Obtain a console cable to connect to the switch. Typically this is the pale blue RJ45-to-serial cable; other times it is a Micro USB cable.

2. Open a terminal connection to the switch. On a PC I recommend either PuTTY or Tera Term (the default Cisco console settings are 9600 baud, 8 data bits, no parity, 1 stop bit).

3. Power on the switch while holding down the "Mode" button until the SYST light flashes and the terminal window shows: "The password-recovery mechanism is enabled".

4. In the terminal window type: del flash:config.text

5. Then del flash:vlan.dat

6. Then reset

The switch will reboot with no configuration and present the setup wizard for setting new passwords and a basic configuration.