This came through during some e-waste recycling. It is a 4GB hard drive (yes, 4GB, not 4TB....circa 2001?), but it has mechanical/spinning platters! It appears to be in the CompactFlash form factor. The card it is plugged into is a USB adapter. Some searching says it is most likely from an early PlayStation memory expansion card.

Jungle-Information Technology

Ramblings of an IT Professional (Aaron's Computer Services) Aaron Jongbloedt

10Gb network card

I ran across this and had to research what the heck it was. I don't think I have ever seen a connector like that before. Turns out it's a 10Gb network card; this particular one is a Chelsio.

Dell AI GPU Server: Dell PowerEdge C4140

What's inside a 100Gb DAC cable?

Ever wanted to know what is inside of a 100Gb DAC cable? I finally had a defective one come through, so I opened it up.

Lenovo SR655 Server: IPMI/BMC Password & firmware

Early versions of this server have at least one flaw. If one has a server and doesn't know the password for IPMI access (the default username is "USERID"), there is no way to change it! One can go into the BIOS and go to change the account, but one must actually type out the user account name, as there is no display or drop-down box; literally, there could be 10 usernames on the system. Typing in USERID, then typing in a new password, save, exit, test.....no dice, it will not work! OK, so go back into the BIOS, create a new user with a new password, save, exit, no dice, it will not work! It wasn't until AFTER updating the firmware that I could create a new user and get into the IPMI. These servers support Redfish (the DMTF's REST-based server management standard), so other tools SHOULD work.
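Because these servers expose Redfish, account management can also be scripted over HTTPS once one working login exists (Redfish still needs valid credentials, so it is not a way around the lockout above). Below is a minimal Python sketch, assuming the BMC follows the DMTF standard account collection at `/redfish/v1/AccountService/Accounts` and accepts HTTP Basic authentication; I have not verified either against the SR655's firmware. It only builds the request. The helper name, host address, usernames, and passwords are all hypothetical.

```python
import base64
import json
import urllib.request


def build_create_account_request(bmc_host: str, admin_user: str, admin_password: str,
                                 new_user: str, new_password: str,
                                 role_id: str = "Administrator") -> urllib.request.Request:
    """Build (but do not send) a Redfish POST that creates a new BMC account.

    Assumes the standard DMTF ManagerAccount collection path; some BMC
    firmware may differ.
    """
    url = f"https://{bmc_host}/redfish/v1/AccountService/Accounts"
    body = json.dumps({
        "UserName": new_user,
        "Password": new_password,
        "RoleId": role_id,
    }).encode("utf-8")
    # HTTP Basic auth using an account that already works on the BMC.
    token = base64.b64encode(f"{admin_user}:{admin_password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
    )


if __name__ == "__main__":
    # Hypothetical values; 192.0.2.0/24 is a documentation-only address range.
    req = build_create_account_request("192.0.2.10", "USERID", "current-password",
                                       "newadmin", "N3w-Passw0rd!")
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
```

Sending it would be a call to `urllib.request.urlopen(req)`, which will likely need an `ssl.SSLContext` that trusts the BMC's self-signed certificate. Some BMCs use a fixed set of account slots and expect a PATCH to an existing account URI rather than a POST to the collection, so check what the BMC's own Redfish tree shows first.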

Also, when setting the IP address, pay close attention to which NIC one is configuring: one option is the dedicated BMC NIC, and the other is for a different NIC. In this case the server has no other NICs, so I don't know where that second option goes. I have added a pair of 100Gb NICs, so maybe it's riding on top of those?

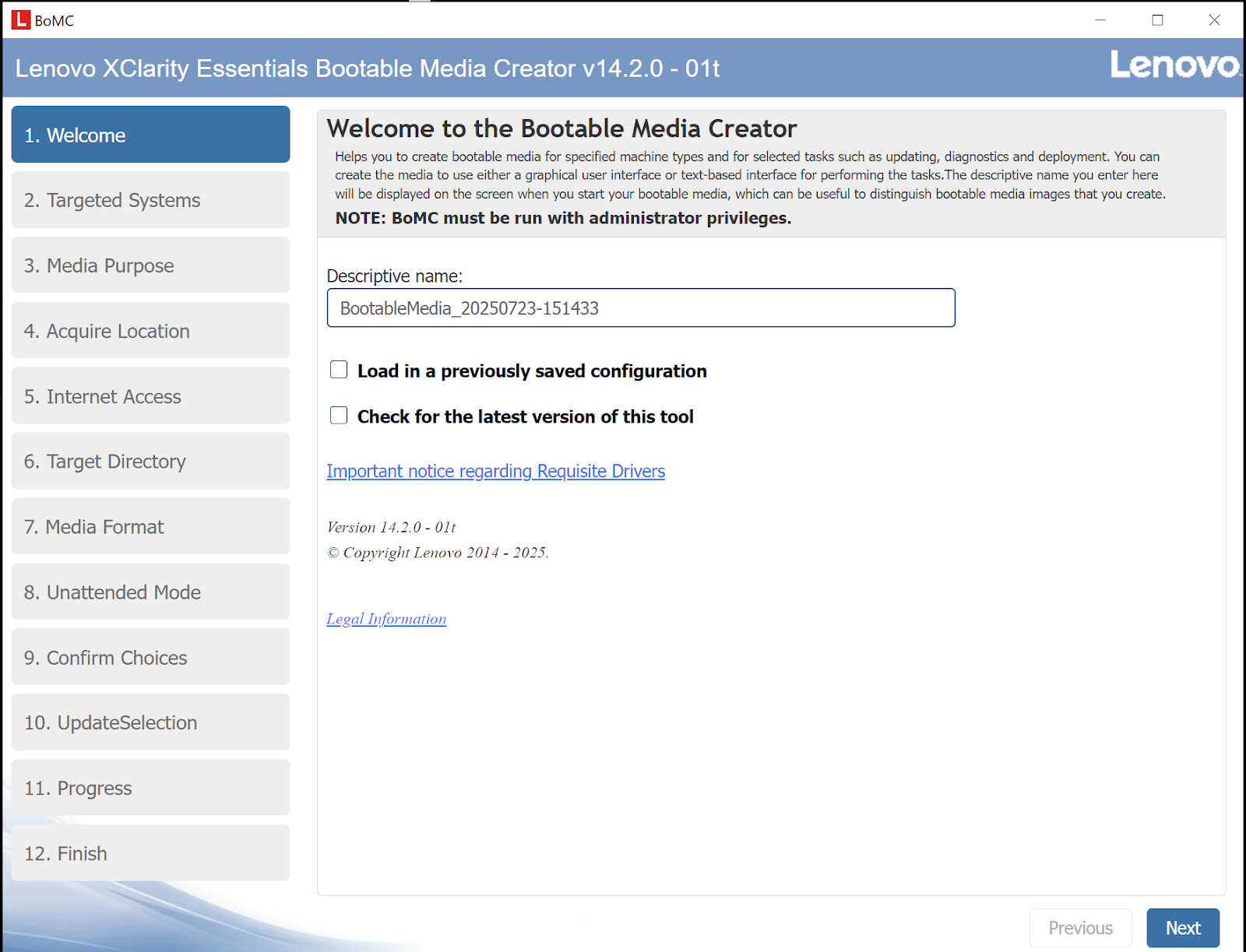

The easiest way to update most of the firmware on these machines is to acquire a utility called "Lenovo XClarity Essentials Bootable Media Creator". Download and install it on a Windows machine and go through the prompts; it will download all of the latest firmware for the server one chooses and build it into a bootable ISO. From there, load it onto a USB drive or whatever. FWIW, I haven't tried it with Ventoy yet.

Dell PowerEdge R640: onboard SATA ports

Oftentimes I choose to use the onboard SATA port on my servers, usually because I want the boot OS drive to sit outside of the RAID controller. Maybe all of the drive bays are in use for data drives; maybe it's to distribute I/O load, to be less reliant on a specific controller, or even just for simplicity.

The Dell R640 uses a "new-ish" connector: SFF-8611, aka OCuLink. I have seen them in use in SuperMicros. I guess these are intended for PCIe communications; however, Dell decided to use them for SATA connections. With that in mind, I was able to track down an "OCuLink PCI-Express SAS SFF-8611 4i to 4x SATA Server High-speed Conversion Cable". It did not work; apparently the pinout is different. So I broke down and paid a premium for a specific Dell cable. On the R640, that cable would normally be used for the optical drive, but this particular machine didn't have an optical drive bay, so it didn't help me either. I did eventually find the correct motherboard SATA cable: PN 0VDHV7.

SuperMicro IPMIView

IPMI (Intelligent Platform Management Interface) is a standard for managing, accessing, and configuring servers. Companies like SuperMicro, Quanta, and Celestica are pretty good about sticking to it; even HP and Dell allow limited use of it. One might also see/hear the term BMC (Baseboard Management Controller), which is really just the hardware that runs the IPMI interface.

When dealing with older hardware, it can be a real PITA to administer machines. Things like getting the remote KVM/console to work with modern-day browsers can be a real headache. Each manufacturer, and often each model, has its own idiosyncrasies.

ipmitool is a software package one can download and install to issue commands to a remote machine's BMC. Think of it like "PowerCLI" for the BMC. Now enter SuperMicro's IPMIView, which takes ipmitool one step further. It is a GUI that allows one to add many systems into a control panel, flip through those systems, and do remote access, management, and configuration. IMHO the biggest feature is having an easy way to get at the remote KVM/console of the machine: no more making SSL and security exceptions for each machine one administers. As the name suggests, this tool is made for SuperMicro systems; however, since it operates on industry standards, it also works with many other manufacturers' systems.

https://www.supermicro.com/en/solutions/management-software/ipmi-utilities

IPMIView does require Java to run, and it may require some initial security settings, but that beats making changes for every single machine. Each system is a bit different, so some things work and some don't (e.g., hardware monitoring).
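For the command-line side, a minimal Python sketch of driving ipmitool against several BMCs might look like the following. It assumes ipmitool is installed and the BMCs accept IPMI v2.0 over the network (`-I lanplus`); `-E` makes ipmitool read the password from the `IPMI_PASSWORD` environment variable, so it doesn't show up in the process list. The helper names, the "ADMIN" username, and the 192.0.2.x addresses are hypothetical.

```python
import os
import shlex
import subprocess
from typing import List


def ipmitool_cmd(host: str, user: str, *command: str) -> List[str]:
    """Build an ipmitool argument list for a remote BMC (IPMI v2.0 / lanplus).

    The password is deliberately left off the command line; -E tells
    ipmitool to read it from the IPMI_PASSWORD environment variable.
    """
    return ["ipmitool", "-I", "lanplus", "-H", host, "-U", user, "-E", *command]


def run_ipmitool(host: str, user: str, password: str, *command: str) -> str:
    """Run one non-interactive ipmitool command and return its stdout."""
    env = dict(os.environ, IPMI_PASSWORD=password)
    result = subprocess.run(
        ipmitool_cmd(host, user, *command),
        env=env,
        capture_output=True,
        text=True,
        check=True,  # raise CalledProcessError if ipmitool exits non-zero
    )
    return result.stdout


if __name__ == "__main__":
    # Hypothetical BMC addresses; print the commands instead of running them.
    for bmc in ["192.0.2.20", "192.0.2.21"]:
        print(shlex.join(ipmitool_cmd(bmc, "ADMIN", "chassis", "power", "status")))
```

Swapping `chassis power status` for `sdr list` pulls sensor readings instead, with the same per-vendor hit-and-miss results on hardware monitoring.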

Firmware updates of Dell PowerConnect 6224/6248 switches

Who fools around with switches that are over 15 years old? Well, I do! They still work, and when basic 1Gb connectivity is all that is needed, why not? Besides, new 24-port managed switches aren't exactly free.

Often the firmware on these switches is so old and insecure that even if the web GUI is enabled, it's a struggle to get it to function properly. Thus it is necessary to do the update from either an SSH session or, preferably, the local console/serial port. The newest firmware, v3.3.18, is from 2019, so it isn't great in terms of being updated, but it's better than the original 2007 code!

Here are the basic steps to update the firmware on them:

1. Obtain and unpack the firmware from Dell. Make note of the location of the files. https://www.dell.com/support/home/en-us/product-support/product/powerconnect-6224/drivers

2. Obtain and install a terminal program (e.g., PuTTY or Tera Term). Connect to the switch.

3. Obtain and install a TFTP server (e.g., Tftpd64), and point the server to the location of the unpacked firmware files.

4. From the SSH or console session, enter these commands:

- en

- copy tftp://address-of-tftp-server/firmware-file-name.stk image

- show ver (make note of which image has the newer firmware)

- boot system image1 (or image2, whichever holds the newer firmware)

- copy running-config startup-config

- reload

5. IF GOING FROM FIRMWARE v2.X TO v3.X: during the boot process, choose option #2 to get the alternate boot menu, then choose option #7, "update the boot code", then boot normally. Failure to do this will cause the system to boot loop.
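The steps above are easy to fat-finger over a serial console, so one option is to generate the exact command list ahead of time and paste it in line by line. This is a minimal Python sketch under a few assumptions: the switch takes the same PowerConnect 62xx commands listed in step 4, the target image slot has already been read from the `show ver` output, and the boot-code check only covers the 2.x to 3.x case described in step 5. The function names, address, file name, and version strings are hypothetical placeholders.

```python
from typing import List


def powerconnect_upgrade_commands(tftp_server: str, firmware_file: str,
                                  target_image: str) -> List[str]:
    """Return the step-4 CLI commands, in order, for a PowerConnect 62xx switch."""
    if target_image not in ("image1", "image2"):
        raise ValueError("target_image must be 'image1' or 'image2'")
    if not firmware_file.endswith(".stk"):
        raise ValueError("expected a .stk firmware file")
    return [
        "en",  # enter privileged (enable) mode
        f"copy tftp://{tftp_server}/{firmware_file} image",  # download the new firmware
        "show ver",  # check which image slot now holds the new version
        f"boot system {target_image}",  # boot from that slot next time
        "copy running-config startup-config",  # save the configuration
        "reload",  # reboot into the new firmware
    ]


def needs_boot_code_update(current_version: str, new_version: str) -> bool:
    """Step 5: a v2.x -> v3.x upgrade also needs the boot code updated."""
    current_major = int(current_version.lstrip("vV").split(".")[0])
    new_major = int(new_version.lstrip("vV").split(".")[0])
    return current_major == 2 and new_major == 3


if __name__ == "__main__":
    for line in powerconnect_upgrade_commands("192.0.2.5", "firmware-file-name.stk", "image2"):
        print(line)
    if needs_boot_code_update("2.2.0.3", "3.3.18"):
        print("Remember: boot menu option #2, then #7, before the normal boot.")
```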

Dell PowerEdge R520: dual vs. single CPU

If one ever decides to add a second CPU to a Dell PowerEdge R520, then besides the CPU, CPU heatsink, and extra system fan, one also needs a different PCIe riser card.

What?

Turns out the riser cards are different! If one puts a second CPU into a system without replacing the riser, the system works; however, the system LCD will flash amber and display the error "HWC2005 system board riser cable interconnect failure". The machine still functions just fine; it just has the annoying alerts.